The Big 4K Gaming Debate In 2020: Is It Worth It?

It doesn't really come as a surprise to us when customers ask about the possibilities of 4K, especially with the ever-rising popularity of 4K in film, television and streaming services. Moreover, with next-gen consoles on the horizon, the notion of 4K gaming has never been more prominent than it is now. Over the last few weeks, I've been asked a number of questions about which monitor someone should get for a new setup and, more specifically, whether they should be considering a 4K screen as an option.

So, I thought I'd try to cover the whole debate in today's post, and weigh up all the different elements of the answer - because surprise, surprise, it isn't as simple as just a yes or no. As always, there are various different considerations to make, and what better place to start than by covering one of the most overlooked (and possibly controversial) points when it comes to higher resolutions, since it's the one thing which will vary most from person to person?

Can we even tell the difference?

One thing that still amazes me today is how many people don't actually go and see what a new screen looks like for themselves. In today's society, where the online, one-click culture is so prevalent, it's easy to forget that many outlets will actually have display models for you to look at in person. This is the first place to start - if you've never seen a 4K display panel before, you should go and find a few to look at and compare to the standard 1080p and 1440p monitors. Most people will be able to see a difference, but there are a few people that can't always tell. It's an astounding notion to those of us who do notice the substantial improvement in image quality, but if you do happen to fall into the latter category, there's really not a lot of point in splashing out on a 4K screen.

The other thing worth considering is what screen size you're looking to purchase. Even keen-eyed individuals will sometimes struggle to tell the difference between a 4K image on a 32" screen and a 1440p image on a 24" screen. This, quite simply put, is because for a given resolution, the smaller the screen, the higher the pixel density (pixels per inch), meaning a better perceived quality overall. This is why 4K has taken off so much quicker in the film and TV industry (as well as the upcoming consoles), as most people tend to have much larger screens in their living rooms than they would at their desk. Those bigger screens stretch the pixels further, decreasing perceived image quality overall, but that is then countered by the increased pixel count offered by 4K resolutions.
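To put rough numbers on that, here is a minimal Python sketch of the pixel density calculation. The function name and the 55" TV size are my own illustrative assumptions, and the figures ignore viewing distance, which also affects how sharp a screen looks.

```python
import math

def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Linear pixel density: pixels along the diagonal divided by the diagonal in inches."""
    return math.hypot(width_px, height_px) / diagonal_in

# Example displays. The two monitors are the ones mentioned above;
# the 55-inch TV is an assumed, illustrative living-room size.
displays = {
    "4K monitor, 32 inch": (3840, 2160, 32.0),
    "1440p monitor, 24 inch": (2560, 1440, 24.0),
    "4K TV, 55 inch (assumed size)": (3840, 2160, 55.0),
}

ppi = {name: pixels_per_inch(*spec) for name, spec in displays.items()}
for name, value in ppi.items():
    print(f"{name}: {value:.1f} PPI")
```

The two monitors land within roughly 15 PPI of each other, which goes some way to explaining why they can be hard to tell apart, while the same 4K resolution spread across a large TV ends up noticeably less dense.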

Of course, none of this even matters if you don't have the hardware to generate a 4K image...

What kind of hardware do you need to run games at 4K?

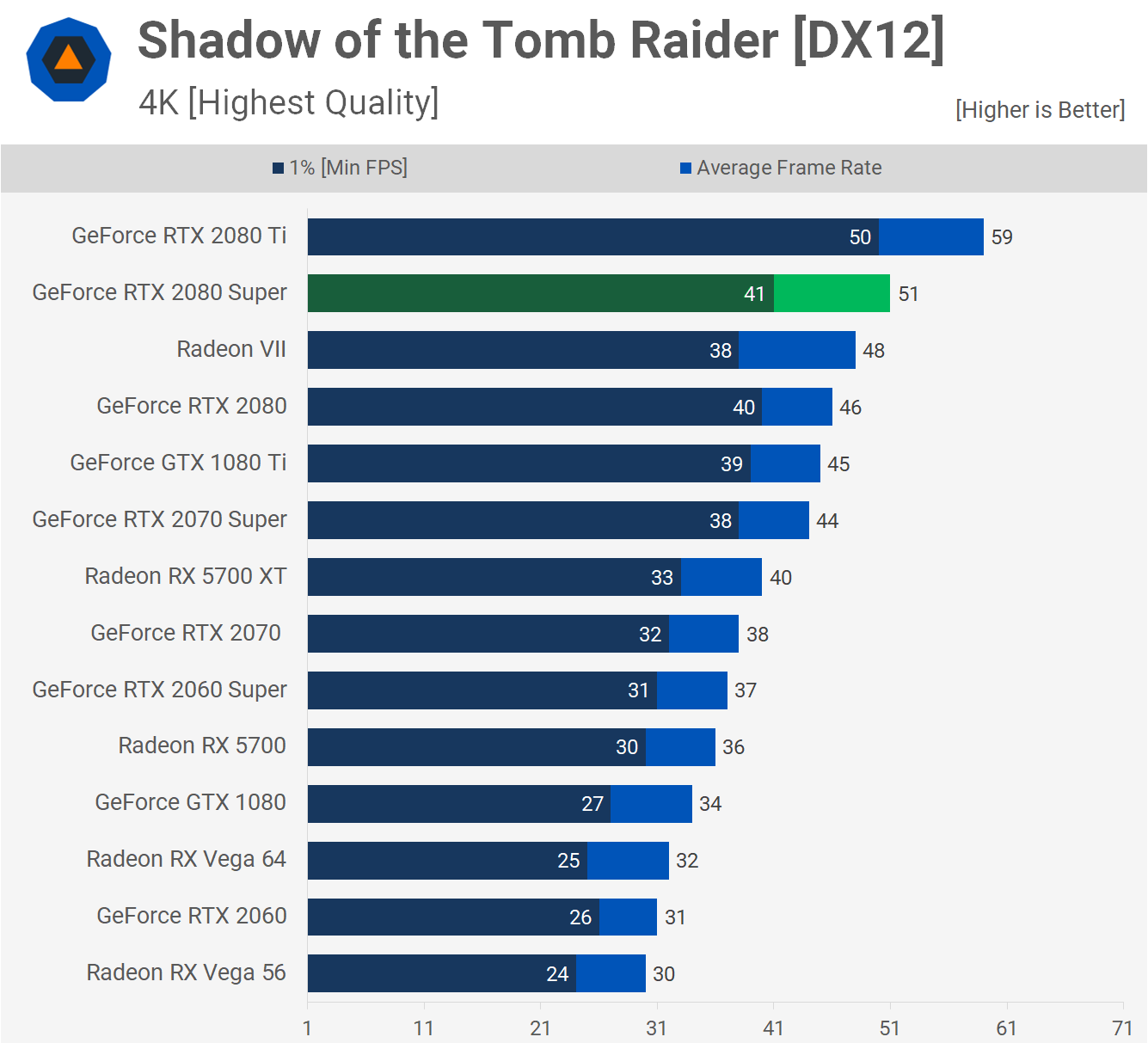

Well, you might be surprised to find that technically, you could get by just fine with an RTX 2060 Super, paired with either a Ryzen 5 3600 or an i5 10600K, which would handle most big-name games at 4K resolutions. But that does come with a catch: you'll likely be capped at around 40 frames a second, tops. Now, similarly to the earlier point we covered with resolution versus screen size, if frame rate is something you don't generally notice, then that will probably be more than ample, as on the whole, you'll be able to maintain 30fps as a minimum (which is often cited as the most that the human eye can see, although that claim is a myth). However, if, like many of us here in the office, frame rate is something you're quite sensitive to, then 30fps won't really cut it, and you'll be wanting to achieve a minimum of 60fps.

This is often where the 4K dream starts to crumble - as you increase either frame rate or resolution, you also increase the demand on your hardware. As such, increasing both simultaneously compounds that demand, since the GPU has to draw more pixels per frame and more frames per second. With today's available hardware, even an RTX 2080 Ti paired with a CPU as powerful as either the Ryzen 9 3900X or i9 10900K can struggle to maintain that 60fps target at 4K. That said, with the announcement of the highly anticipated RTX 3000 Series of GPUs, which will be hitting the shelves as soon as September 17th, that could well be changing - but more on that later.
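As a rough illustration of how resolution and frame rate multiply together, the sketch below counts the pixels a GPU has to draw each second. Treat it as a first-order proxy only: real-world GPU load doesn't scale purely with pixel count, and the names used here are my own.

```python
# Standard 16:9 resolutions in pixels (width, height).
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}

def pixels_per_second(resolution: str, fps: int) -> int:
    """Pixels drawn per second: pixels per frame multiplied by frames per second."""
    width, height = RESOLUTIONS[resolution]
    return width * height * fps

# Use 1080p at 60fps as the reference workload.
baseline = pixels_per_second("1080p", 60)

for resolution in RESOLUTIONS:
    for fps in (30, 60):
        ratio = pixels_per_second(resolution, fps) / baseline
        print(f"{resolution} at {fps}fps: {ratio:.2f}x the pixels of 1080p at 60fps")
```

By this measure, 4K at 60fps means drawing four times as many pixels every second as 1080p at 60fps, and even 4K at 30fps is double the 1080p at 60fps workload.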

Assuming you aren't in the position to pick up a brand new prebuilt Gaming PC any time soon, but are still considering the possibility of a 4K monitor, you could argue that there are other ways to help regain those precious frames without decreasing resolution or upgrading your hardware, which leads us to our next consideration...

Optimising in-game settings

Not everyone has the spare cash to shell out for something as extravagant as an RTX 2080 Ti, or possibly even those new RTX 3000 cards for that matter, let alone a well-matched processor to pair with them. So what else could be done to achieve 60fps at 4K on less capable hardware? The easiest way? Tweak the settings in the games you play.

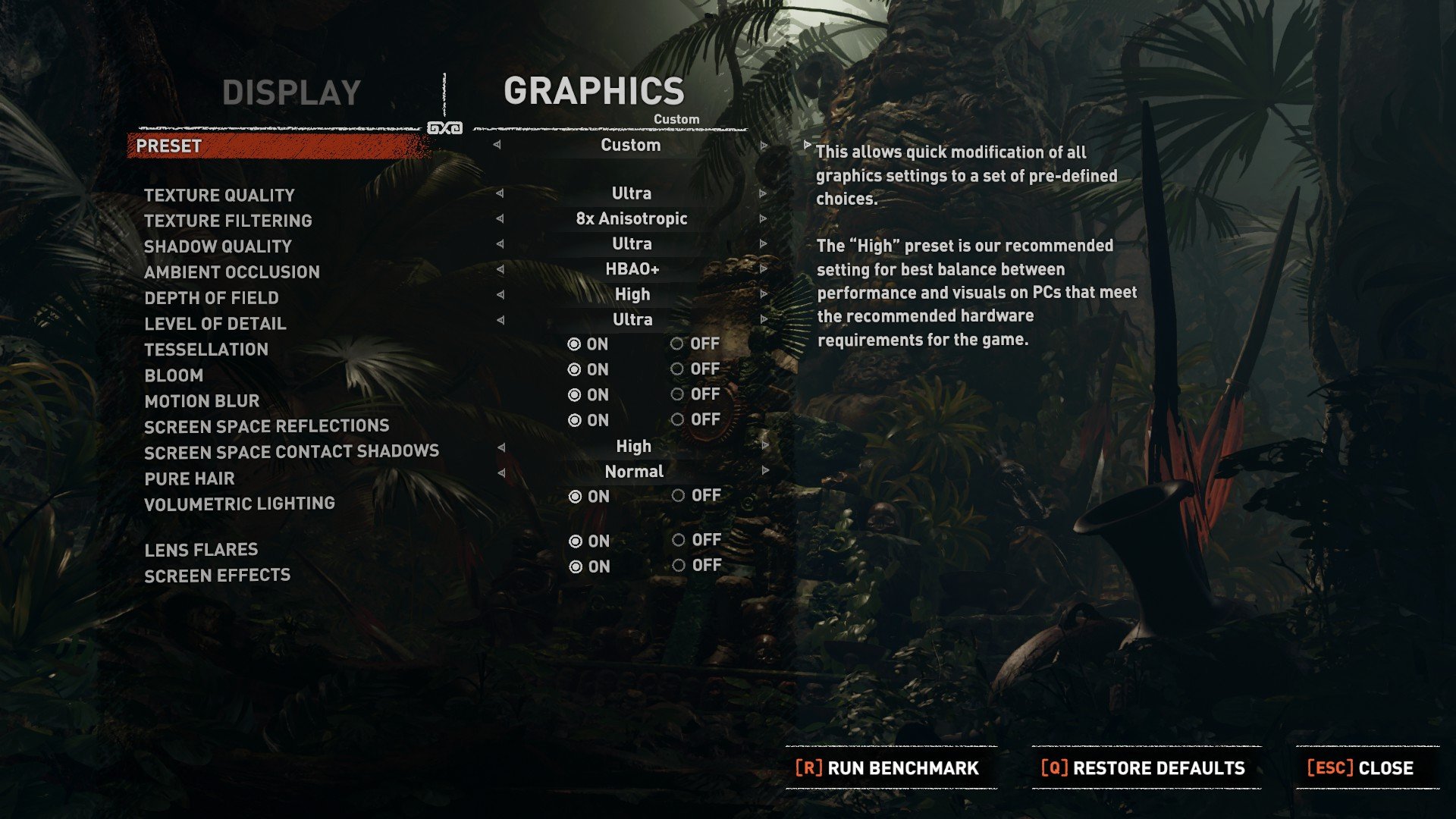

If you're used to playing titles at high or ultra settings at an equally high frame rate in 1080p, then stepping up to 4K can be done if you're willing to lower some of those in-game settings - things like shadows, anti-aliasing, bloom and various other post-processing effects. If well optimised and tested thoroughly, it's well within the realms of possibility to claw back a solid amount of fps, as much as 30 or 40 frames. The problem, however, is that the graphical fidelity of the game will naturally start to degrade, and that degradation will be far more noticeable in some titles than in others, meaning you'd need to constantly micro-manage settings across various games. In essence, this means a great deal of headache and faffing, which may or may not be worth it.

Ultimately, if upping resolution makes the game look crisper and more detailed, but lowering graphical settings diminishes fidelity, what's the point? A crisp image that looks like trash still looks like trash, but a 'softer' image that looks fantastic still looks fantastic.

However, this could potentially change with NVIDIA's new RTX 3000 series. Aside from offering significant, next-gen hardware performance gains, these cards will be arriving alongside NVIDIA's new and improved DLSS (Deep Learning Super Sampling). DLSS is a very clever feature offered by some titles (and potentially many more in the future) which renders the game at a lower internal resolution and then uses AI and deep learning to upscale it, allowing users to play at "artificial" high resolutions without suffering a huge performance loss. In fact, many people report that DLSS can actually produce 4K output on lower-end RTX hardware, with perceived image quality that is better than native 4K. Impressive stuff.
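To give a feel for why upscaling saves so much work, here is a small Python sketch of the internal resolution a game might render at before being upscaled to 4K. The per-axis scale factors are the values commonly cited for DLSS 2.0's Quality and Performance modes; they are my assumption rather than something NVIDIA or this post guarantees, and they may differ by game or DLSS version.

```python
from fractions import Fraction

# Assumption: commonly cited per-axis render scales for DLSS 2.0 modes.
# These are illustrative and may vary between titles and DLSS versions.
ASSUMED_DLSS_SCALES = {
    "quality": Fraction(2, 3),
    "performance": Fraction(1, 2),
}

def internal_resolution(out_width: int, out_height: int, mode: str) -> tuple[int, int]:
    """Resolution the game renders at before upscaling to the output size."""
    scale = ASSUMED_DLSS_SCALES[mode]
    return int(out_width * scale), int(out_height * scale)

for mode in ASSUMED_DLSS_SCALES:
    width, height = internal_resolution(3840, 2160, mode)
    share = (width * height) / (3840 * 2160)
    print(f"{mode}: renders {width}x{height}, about {share:.0%} of native 4K's pixels")
```

Under these assumed factors, a 4K output in Performance mode is rendered internally at 1920x1080, so the GPU only has to draw a quarter of the pixels before the upscaler fills in the rest.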

So, from the hardware side of things, 4K on high settings at a 60fps minimum could become significantly easier to achieve in the near future, but is it the only alternative to 1080p?

The 1440p compromise - is it a compromise at all?

Some say that 1440p is the perfect compromise between 1080p and 4K. And I would tend to agree, if it weren't for the fact that I don't feel it's much of a compromise at all - I have a 4K TV in my living room, and a 1440p monitor on my desk. But seeing as the monitor is about a fifth of the size, the added pixel density makes for an overall image quality that isn't far off that of the TV at its native resolution.

In fact, when it comes to games in particular, the TV only just edges out my monitor for image sharpness. But, unlike when simply watching something, be it a film, series or YouTube video (when, for me, the resolution difference is most prominent), when I'm playing a game and am completely immersed in its environment, that focus on interactivity draws my attention away from the minor differences in resolution. The images are still much clearer and the quality much higher than that of my old 1080p monitor, and I also didn't need to pay an arm and a leg for hardware capable of producing a 1440p image. I also haven't seen a single title fall below 60fps at the highest in-game settings (well, except for a few edge cases where the games have been poorly optimised by the devs, *cough* Ark: Survival Evolved *cough*). And personally, I am particularly sensitive to frame drops below that 60fps mark, so would sooner have a stable frame rate just over that figure to ensure I don't constantly feel as though my games are stuttering or lagging. But, at the end of the day, that's me, not you.

The verdict

As of writing, given the situation with current hardware limits, the yet-to-be-proven new generation of hardware, and the associated costs when aiming for 60fps at 4K, I would argue that 1440p isn't a compromise at all; rather, it should be a given. It's the next logical step for anyone looking to move up from 1080p, as I believe the step up from 1080p to 1440p is far more noticeable than, say, from 1440p to 4K, especially when gaming on standard or even ultra-wide monitors.

What's more, those various new GPUs releasing towards the end of this year are set to offer substantial performance gains over existing hardware. Hopefully, this will mean those existing hardware limitations are finally slashed and, as such, I believe that high-refresh 1440p gaming on ultra settings will likely become the norm. The cost of graphics cards capable of achieving this has plummeted, for new hardware and the RTX 2000 series alike, and with it, we may well see the associated costs of 1440p drop, and potentially even 4K too.

With that said, if you're intending to game on a whopping great big TV or monitor, and are looking to invest your savings in some new hardware, then 4K is probably the way to go for you. But if you're looking for a substantial, affordable upgrade from 1080p that will see you smiling for a good few years (by which time 4K may well have proven to be even more achievable, and affordable), then I would highly recommend and can personally vouch for the 1440p route instead.

How do you feel about the 4K gaming scene? What are your thoughts? Let us know down in the comments.

Posted in TechShot

Published on 08 Sep 2020

Last updated on 09 Feb 2023